OK, so this is pretty clever. Clever enough that I’m posting about it Friday night after work when I could be out doing things in the city.

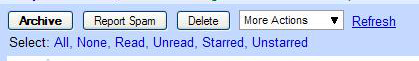

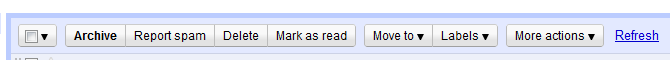

Previous E-mail Options in Firefox

As the caption reads, this is a screenshot of Gmail in Firefox under the older interface. To select all e-mails or only certain e-mails, you click on “All” or one of the other text options.

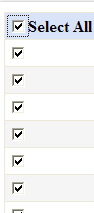

Standard Select All Check box

This is what you normally see around applications and websites to select all. It’s an accepted convention, so regular users know that by clicking on the top check box, all check boxes in the list will be selected.

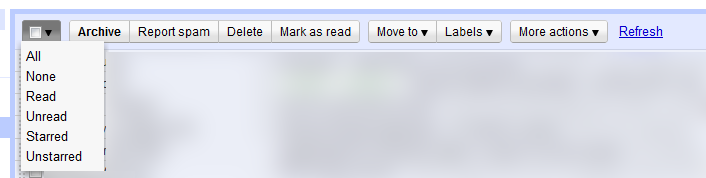

New E-Mail Options in Chrome

This is what the current e-mail options area looks like. Sorry for using a Firefox screenshot above and a Chrome screenshot for the new UI. The select all box looks familiar, right? Wait, what’s that? There’s a drop down option next to the check box?!

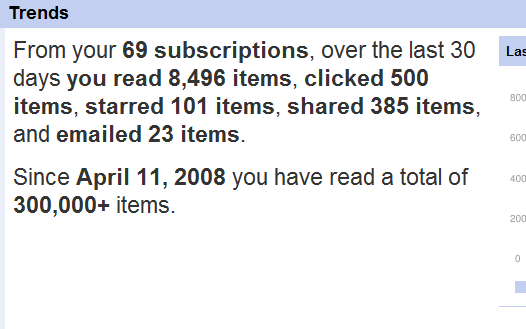

New E-Mail Options in Chrome - After Drop Down is Clicked

This screenshot shows what happens when you click it. As I said, this is some clever stuff. The UI goes from the clunky, non-standard convention of clicking “All” to clicking the check box when you want every item in the list. This is a good thing, as general users are already accustomed to clicking a check box to select everything, and that alone would be a good enough reason for the change. Power users who want a specific subset (e.g. Unread or Starred) can still get it easily through the drop down option.
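For anyone who wants to see the behavior spelled out, here is a rough sketch of the two controls in TypeScript. This is my own guess at the logic, not Gmail’s actual code: the `Message` shape, the `selectBy` and `toggleAll` names, and the limited option list (only the options mentioned in this post) are all assumptions for illustration.

```typescript
// Hypothetical model of one row in the message list (field names are assumptions).
interface Message {
  id: number;
  unread: boolean;
  starred: boolean;
  selected: boolean;
}

// Only the options named in this post; the real menu may offer more.
type SelectOption = "All" | "None" | "Unread" | "Starred";

// Drop down behavior: select exactly the rows that match the chosen option.
function selectBy(messages: Message[], option: SelectOption): Message[] {
  const matches: Record<SelectOption, (m: Message) => boolean> = {
    All: () => true,
    None: () => false,
    Unread: (m) => m.unread,
    Starred: (m) => m.starred,
  };
  return messages.map((m) => ({ ...m, selected: matches[option](m) }));
}

// Check box behavior: if every row is already selected, clear the selection;
// otherwise select everything.
function toggleAll(messages: Message[]): Message[] {
  const allSelected = messages.length > 0 && messages.every((m) => m.selected);
  return selectBy(messages, allSelected ? "None" : "All");
}

// Example: one click on the check box, then a pick from the drop down.
const inbox: Message[] = [
  { id: 1, unread: true, starred: false, selected: false },
  { id: 2, unread: false, starred: true, selected: false },
  { id: 3, unread: true, starred: true, selected: false },
];
const everything = toggleAll(inbox);
const unreadOnly = selectBy(inbox, "Unread");
```

The point of the sketch is that the check box is just a shortcut for the “All” and “None” entries, so the drop down never has to contradict the convention.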

What are the pros and cons of this change?

Off the top of my head:

Pros

- Adopts common Select All check box convention

- Reduces UI footprint (pixel real estate)

- Simplifies the UI by presenting fewer options up front

- The ‘None’ option does not break convention, since you can deselect all by clicking the check box a second time

Cons

- Power users now have to click twice where a single click used to be enough. (Before, they could simply click on ‘Unread’.)
